Being enough lazy, I'd like to have a possibility to control my SQL schema (where I store all my data), under some parameters. The tutorial for Flask and SQLAlchemy says I must create schema and afterwards it can be managed by extensions. Meantime, one of the biggest problem until today was that received data didn't sorted correctly. I mean they really didn't sort correctly. Today I figured out, this has been caused by

Firstly I should stress I use pymysql dialect for connection cause it is targets 100% compatibility. So, correct forcing connection to use Unicode on client side is to add

Secondly, we should set unicode conversion globally. For this add

Thirdly we should create MySQL schema with the proper character set and collation. As my DB is entitled clinic, so SQL statement is:

Indeed, I decided to create default schema, by simple statement

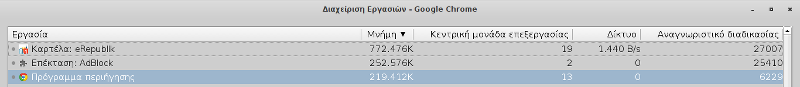

And here are the results:

CHARACTER SET settings in my Database. I should mention, that SQL Alchemy performs on the fly transformation of received data from latin1 to utf8. So my initial idea was to understand how to avoid such behaviour, and (IMHO) this was the reason of improper sorting. So outside of the Python the data has been looked like:mysql> SELECT id, name FROM divisions ORDER BY name; +----+-------------------------------------------------------+ | id | name | +----+-------------------------------------------------------+ | 4 | Clinic No.4 | | 6 | ÐбÑолютно нова клініка | | 5 | Ðова клініка | | 7 | Ще одна клініка | | 1 | global | +----+-------------------------------------------------------+Well it is not the sorting I wanted, at least because "Clinic No.4" and "global" should be together, and sorting should ignore letter cases. For the last thing, there is a

collate call, that should solve my problem. But it didn't (because of the encoding problem). So in order to get proper encoding there were some prosper ways:- edit MySQL config so it started to process data correctly (can be done on DB level);

- force somehow data connection to process data on-the-fly (can be done on connection level);

- trying to tell database how data should be stored, so I didn't think about them.

Firstly I should stress I use pymysql dialect for connection cause it is targets 100% compatibility. So, correct forcing connection to use Unicode on client side is to add

"?charset=utf8" to the connection string. So our target will look like: mysql+pymysql://username:password@localhost/db_name?charset=utf8.Secondly, we should set unicode conversion globally. For this add

convertunicode=True and encoding=utf8 as params to sqlalchemy.createengine().Thirdly we should create MySQL schema with the proper character set and collation. As my DB is entitled clinic, so SQL statement is:

CREATE SCHEMA clinic DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_global_ci;

Indeed, I decided to create default schema, by simple statement

CREATE SCHEMA clinic; and tune it on initialization DB from the app, by running the following code:from sqlalchemy import create_engine

engine = create_engine("mysql+pymysql://username:password@localhost/db_name?charset=utf8", encoding=utf8, convert_unicode=True)

SCHEMA = "db_name"

connection = engine.connect()

result = connection.execute("SHOW CREATE SCHEMA " + SCHEMA)

for key in result:

import re

charset_pattern = re.compile("DEFAULT CHARACTER SET (?P[A-Za-z0-9]*?) ", re.I)

charset = re.search(charset_pattern, str(key))

if charset and 'utf' not in charset.group("charset"):

connection.execute("ALTER SCHEMA " + SCHEMA +

" DEFAULT CHARACTER SET 'utf8'"

" DEFAULT COLLATE 'utf8_unicode_ci'")

Here we check if our Schema encoding is UTF8, and set it to correct value if it is not.And here are the results:

mysql> SELECT * FROM divisions ORDER BY name ASC;

+----+-----------------------------+---------+

| id | name | builtin |

+----+-----------------------------+---------+

| 5 | Booble | 0 |

| 6 | Bubble | 0 |

| 1 | global | 1 |

| 4 | Друга клініка | 0 |

| 3 | Перша клініка | 0 |

| 2 | Типова клініка | 1 |

+----+-----------------------------+---------+

6 rows in set (0.00 sec)

mysql> SELECT * FROM divisions ORDER BY name DESC;

+----+-----------------------------+---------+

| id | name | builtin |

+----+-----------------------------+---------+

| 2 | Типова клініка | 1 |

| 3 | Перша клініка | 0 |

| 4 | Друга клініка | 0 |

| 1 | global | 1 |

| 6 | Bubble | 0 |

| 5 | Booble | 0 |

+----+-----------------------------+---------+

6 rows in set (0.00 sec)